Since the Industrial Revolution began in England in the eighteenth century, the world has been transforming itself into an era of modern technology and industry, reflecting the human search for well-being and for better, more effective ways to rest and enjoy life. When we hear the term industrial revolution, most of us think of a single revolution that took place in England. In fact, the world is now witnessing a fourth industrial revolution.

The Fourth Industrial Revolution offers many unique growth opportunities for the world's economies. Unlike previous industrial revolutions, which focused only on automating production processes and increasing earnings, it follows a completely different model: it combines the physical, digital, and biological worlds.

In the context of this modern revolution, the integration of artificial intelligence and robotics into our daily life is accelerating. Artificial intelligence and machine learning will become an essential part of everything we use in our lives, such as home appliances, cars, sensors and drones.

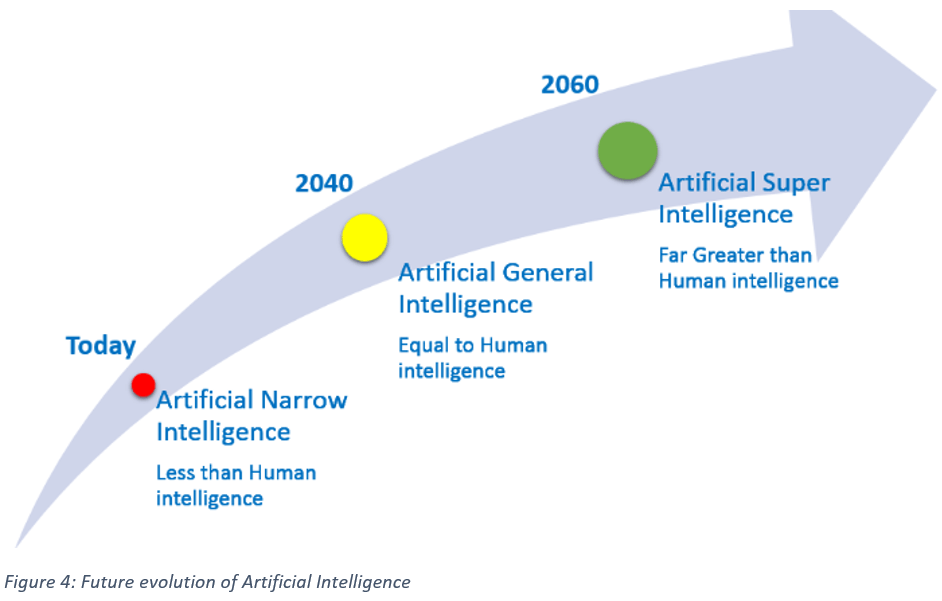

This new revolution, driven by the development of artificial intelligence in recent decades, has unleashed an astonishing range of possibilities, including unlocking genetic mysteries and exploring the human brain. These new technologies seek faster, more efficient, and smarter ways to accomplish everyday tasks, or in some cases to develop an artificial intelligence that surpasses human intelligence. How, then, can we define artificial intelligence?

Since the invention of computers and machines, their ability to perform various tasks has grown exponentially. A branch of computing called artificial intelligence is pursuing the creation of computers or machines as intelligent as human beings.

John McCarthy, often called the father of artificial intelligence, defined AI as “the science and engineering of making intelligent machines, especially intelligent computer programs.”

However, the precise definition and meaning of the word intelligence, and even more so of artificial intelligence, are the subject of much debate and confusion.

Textbook definitions of artificial intelligence vary along two dimensions: thought processes and reasoning on the one hand, and behavior on the other.

Therefore, instead of looking for a single general definition of artificial intelligence, we must restrict ourselves to definitions organized along these two dimensions. Combining them yields four categories of definitions: systems that think like humans, systems that act like humans, systems that think rationally, and systems that act rationally.

The first two categories measure artificial intelligence against human performance, while the last two measure it against an ideal concept of intelligence: “rationality”.

Historically, all four approaches have shaped the evolution of AI. However, there is a strong tension between human-centered approaches and those centered on rationality. A human-centered approach is an empirical science, based on hypotheses and experimental confirmation, whereas the rationalist approach combines mathematics and engineering.

AI is developing so quickly that it sometimes seems magical. Some researchers and developers believe that AI could become so powerful that humans would find it difficult to control. Humans have built AI systems embodying many forms of intelligence in order to reduce the effort and time needed for tasks they consider tedious.

Artificial intelligence has a direct impact on economic growth because it affects the production functions of both goods and ideas. However, the progress of artificial intelligence and its macroeconomic effects will depend on how companies behave, which may be complex. Incentives and company behavior, market structure, and sectoral differences are all directly affected by the presence of artificial intelligence and expert systems.

Other AI disciplines have emerged in recent years. Terms such as machine learning, deep learning, recommender systems, and prediction systems are increasingly heard in companies. However, these confusing designations make it hard to tell the concepts apart or to see how they relate to AI.

In this paper, we try to shed light on these concepts and to define the relationships between them.

Artificial intelligence is the future, artificial intelligence is science fiction, and artificial intelligence has become part of our daily lives. All of these statements are true; it just depends on the perspective from which we view artificial intelligence.

When Google DeepMind's AlphaGo defeated South Korea's Lee Se-Dol at the game of Go in early 2016, the media used the terms artificial intelligence, machine learning, and deep learning to explain how DeepMind won. In fact, these three terms are not the same thing.

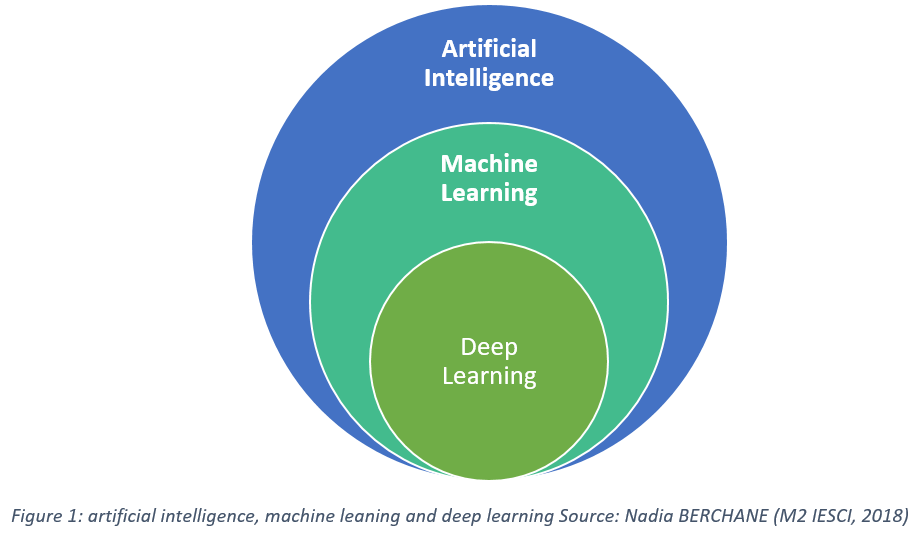

To illustrate the relationship between these terms, we can use concentric circles:

- Artificial intelligence (AI): the largest circle, the idea that emerged first in this field

- Machine learning: the middle circle, which flourished later

- Deep learning: the smallest circle, which drives the current expansion of AI

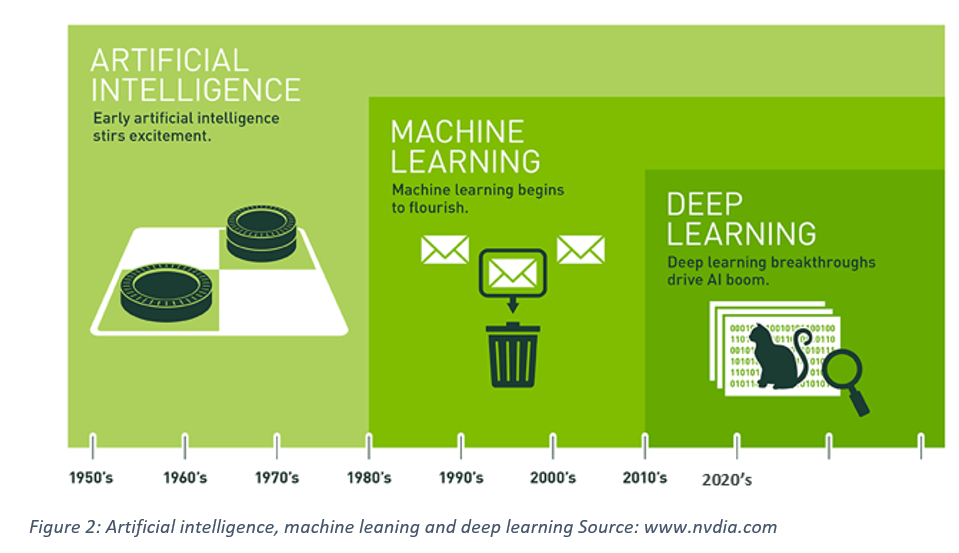

One of the best visual representations of this relationship is the one published on the Nvidia blog. It provides a good starting point for understanding the differences between artificial intelligence, machine learning, and deep learning.

Artificial Intelligence

Artificial intelligence first emerged in 1956, when a group of computer scientists announced the birth of the field at the Dartmouth Conference. Since then, artificial intelligence has been seen as the harbinger of a bright technological future for human civilization.

Artificial intelligence has expanded significantly over the past few years, especially since 2015, thanks to the availability of GPUs that make parallel processing faster, cheaper, and more powerful, combined with virtually unlimited storage and a flood of data of every kind: images, financial transactions, map data, and much more.

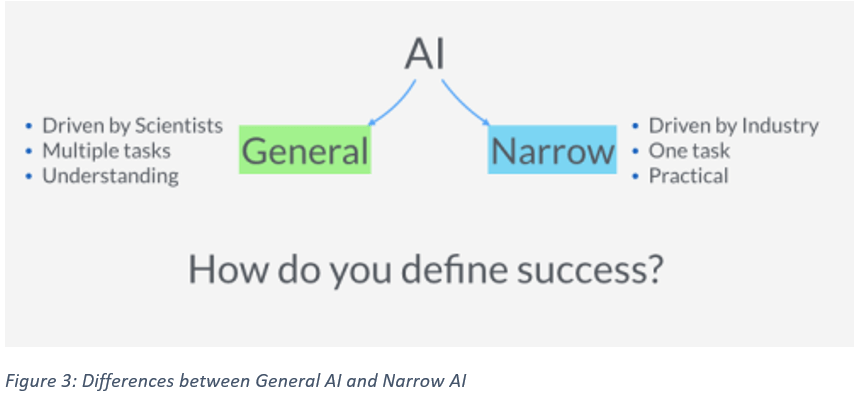

In 1956, the dream of the pioneers of artificial intelligence was to build complex machines, enabled by the new computers, with the same characteristics as human intelligence. This concept is called “General AI”: a machine that has all our senses and all our reasoning. The aim was to build a machine that thinks as we do.

However, what we can actually create is “Narrow AI”: technologies that can perform specific tasks as well as humans, or even better. Examples of Narrow AI include image classification on Pinterest and face recognition on Facebook.

Computer scientists have defined artificial intelligence in different ways, but at its core, artificial intelligence involves machines that think the way humans think. Of course, it is difficult to determine whether a machine is actually thinking! On a practical level, building artificial intelligence means building computer systems that are better than humans at “doing” things.

In the last decade, artificial intelligence has moved from science fiction to reality. Events such as IBM's Watson winning the American quiz show Jeopardy! and Google's artificial intelligence defeating the world champion at Go have brought artificial intelligence to the forefront of public awareness.

Today, every technology giant invests in artificial intelligence projects, and most of us interact with artificial intelligence programs every day, whenever we use smartphones, social media, search engines, and e-commerce sites. One type of artificial intelligence that we interact with a lot is “machine learning”.

Machine learning

The simplest definition of machine learning is the use of algorithms to analyze data, learn from it, and then make a determination or prediction about something. Instead of hand-coding a program with a specific set of instructions to accomplish a task, we train the machine with data and algorithms that give it the ability to learn how to perform the task.
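To make the contrast concrete, here is a minimal Python sketch under our own assumptions: rather than hand-coding a rule such as “predict 1 when x is greater than 3”, the program searches for the threshold that makes the fewest mistakes on labeled examples. The data and the function names `learn_threshold` and `predict` are hypothetical, and a single-split rule like this is only the simplest possible learner.

```python
from typing import List


def learn_threshold(xs: List[float], labels: List[int]) -> float:
    """Pick the threshold t that minimizes errors for the rule "predict 1 if x > t".

    Hypothetical helper: every observed value is tried as a candidate threshold.
    """
    best_t, best_errors = xs[0], len(xs) + 1
    for t in sorted(xs):
        # Count the training examples this candidate rule gets wrong
        errors = sum((x > t) != bool(y) for x, y in zip(xs, labels))
        if errors < best_errors:
            best_t, best_errors = t, errors
    return best_t


def predict(t: float, x: float) -> int:
    """Apply the learned rule to a new value."""
    return int(x > t)


# Hypothetical labeled examples: the program is given data, never the rule itself
xs = [1.0, 2.0, 3.0, 6.0, 7.0, 8.0]
labels = [0, 0, 0, 1, 1, 1]
t = learn_threshold(xs, labels)
print(t, predict(t, 5.0), predict(t, 2.5))  # prints: 3.0 1 0
```

Changing the examples changes the learned rule without editing any code, which is the difference from a hand-coded program.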

Machine learning grew out of the work of the early pioneers of artificial intelligence. Over the years, learning algorithms have included decision tree learning, inductive logic programming, clustering, reinforcement learning, Bayesian networks, and others.
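Of the approaches listed above, clustering is one of the easiest to show in a few lines. The sketch below is a minimal one-dimensional k-means (Lloyd's algorithm), which is our own choice of clustering method; the text does not name one, and the data points and starting centers are hypothetical. No labels are given: the algorithm finds the groups on its own.

```python
from typing import List


def kmeans_1d(points: List[float], centers: List[float], iterations: int = 10) -> List[float]:
    """Lloyd's algorithm for k-means on 1-D data, starting from the given centers."""
    centers = list(centers)
    for _ in range(iterations):
        # Assignment step: each point joins the cluster of its nearest center
        clusters: List[List[float]] = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Update step: move each center to the mean of its points (keep it if the cluster is empty)
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers


# Hypothetical unlabeled data with two obvious groups
print(kmeans_1d([1.0, 1.5, 2.0, 10.0, 11.0, 12.0], centers=[0.0, 5.0]))  # prints [1.5, 11.0]
```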

One of the most promising application areas of machine learning for the coming years is computer vision. Its main purpose is to enable a machine to analyze, process, and understand one or more images captured by an acquisition system. Early approaches relied on hand-coded classifiers, such as edge detection filters that let the program identify where an object starts and stops, or a shape detection program that determines whether an object has eight sides, for example.
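A hand-coded edge detection filter of the kind described above can be sketched in a few lines of Python. This is a simplified illustration under our own assumptions: a grayscale image is a list of rows of brightness values, the kernel is the classic [-1, 0, 1] horizontal difference, and the threshold is fixed by the programmer rather than learned.

```python
from typing import List

Image = List[List[float]]  # rows of grayscale brightness values


def horizontal_edges(img: Image, threshold: float) -> List[List[int]]:
    """Mark pixels where brightness changes sharply from left to right.

    Applies the hand-coded kernel [-1, 0, 1] along each row (a central difference)
    and keeps responses whose magnitude reaches the threshold. Border columns stay 0.
    """
    rows, cols = len(img), len(img[0])
    edges = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(1, cols - 1):
            response = img[r][c + 1] - img[r][c - 1]
            edges[r][c] = int(abs(response) >= threshold)
    return edges


sharp = [[0, 0, 0, 9, 9, 9]]  # a dark region next to a bright region
faded = [[4, 4, 4, 5, 5, 5]]  # the same boundary with much lower contrast
print(horizontal_edges(sharp, threshold=5))  # [[0, 0, 1, 1, 0, 0]]
print(horizontal_edges(faded, threshold=5))  # [[0, 0, 0, 0, 0, 0]]
```

Because the threshold is fixed by hand, the low-contrast row (roughly what fog does to an image) produces no edges at all, which is the kind of brittleness hand-crafted pipelines suffered from.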

Other classifiers recognize characters such as “S-T-O-P”. By combining all these programs, developers could build an algorithm that makes sense of the image and “learns” to determine whether a traffic sign is a stop sign.
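Chaining such classifiers amounts to a rule written by hand. The sketch below assumes two hypothetical upstream detectors, one that counts the sides of a shape and one that reads characters, and combines their outputs with a fixed rule; `SignFeatures` and `is_stop_sign` are illustrative names, not part of any real system.

```python
from dataclasses import dataclass


@dataclass
class SignFeatures:
    """Outputs of hypothetical hand-coded detectors run on one image."""
    num_sides: int  # from a shape detector
    letters: str    # from a character classifier


def is_stop_sign(f: SignFeatures) -> bool:
    # Every condition is written by a programmer; nothing here is learned from data.
    return f.num_sides == 8 and f.letters == "STOP"


print(is_stop_sign(SignFeatures(num_sides=8, letters="STOP")))  # True
# A branch hiding the last two letters is enough to break the rule
print(is_stop_sign(SignFeatures(num_sides=8, letters="ST")))    # False
```

The rule has no notion of “probably”: one missing letter flips the answer, which is why such pipelines struggled when part of a sign was hidden.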

This approach is error-prone, especially on a foggy day when the sign is not clearly visible, or when a tree hides part of it. Until very recently, computer vision and image detection did not come close to rivaling humans: they were brittle and made many mistakes. Deep learning changed this dramatically.

Many companies that provide online services use machine learning to build their own recommendation engines: for example, when Facebook decides what to show in your News Feed, when Amazon suggests products you might want to buy, or when Netflix recommends movies you may want to watch. All these recommendations are predictions based on patterns in existing data.
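A common way to turn patterns in existing data into recommendations is user-based collaborative filtering. The sketch below is a minimal version under our own assumptions: ratings are stored as nested dictionaries, similarity between users is cosine similarity, and the users and films are invented. It does not describe how Facebook, Amazon, or Netflix actually build their engines.

```python
import math
from typing import Dict, List

Ratings = Dict[str, Dict[str, float]]  # user -> {item: rating}


def cosine(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Cosine similarity between two sparse rating vectors (missing items count as 0)."""
    dot = sum(a[i] * b[i] for i in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def recommend(ratings: Ratings, user: str, k: int = 1) -> List[str]:
    """Score items the user hasn't rated by other users' ratings, weighted by similarity."""
    scores: Dict[str, float] = {}
    for other, theirs in ratings.items():
        if other == user:
            continue
        sim = cosine(ratings[user], theirs)
        for item, r in theirs.items():
            if item not in ratings[user]:
                scores[item] = scores.get(item, 0.0) + sim * r
    return sorted(scores, key=scores.get, reverse=True)[:k]


# Hypothetical ratings: ana's tastes resemble ben's, not cai's
ratings: Ratings = {
    "ana": {"Film A": 5, "Film B": 4},
    "ben": {"Film A": 5, "Film B": 5, "Film C": 4},
    "cai": {"Film D": 5, "Film E": 4},
}
print(recommend(ratings, "ana"))  # ['Film C']
```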

Many businesses are now beginning to use machine learning for predictive analytics. Big data analytics has grown in popularity alongside machine learning, which has become a key feature of many analytics tools.
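At its simplest, predictive analytics means fitting a model to past data and extrapolating from it. The sketch below uses an ordinary least squares straight-line fit, one of the most basic predictive models; the monthly sales figures are hypothetical and chosen so the arithmetic is easy to check.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


months = [1, 2, 3, 4]
sales = [10, 12, 14, 16]  # hypothetical monthly sales
slope, intercept = fit_line(months, sales)
print(slope * 5 + intercept)  # forecast for month 5: 18.0
```

Real analytics tools use far richer models, but the pattern is the same: learn parameters from historical data, then apply them to a period that has not happened yet.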

Indeed, machine learning has become so closely associated with statistics, data mining, and predictive analytics that some argue it should be classified as a field separate from artificial intelligence. After all, systems can offer artificial intelligence features such as natural language processing and automation without any machine learning, and machine learning systems do not necessarily need any other artificial intelligence features.

Some people prefer the term machine learning because they find it more technical and less frightening than artificial intelligence. As one commenter on the Web put it, the difference between the two terms is that “machine learning actually works.”

Deep Learning

Deep Learning is a new area of Machine Learning research, which has been introduced with the objective of moving Machine Learning closer to one of its original goals: Artificial Intelligence.[1]

Thanks to deep learning, AI has a bright future. Indeed, deep learning has enabled many practical applications of machine learning and, by extension, of the overall field of AI. Deep learning breaks down tasks in ways that make all kinds of machine assistance seem possible. Driverless cars, better preventive healthcare, and even better movie recommendations are all here today or on the horizon. AI is the present and the future. With deep learning's help, AI may even reach the science fiction state we have imagined for so long.[2]

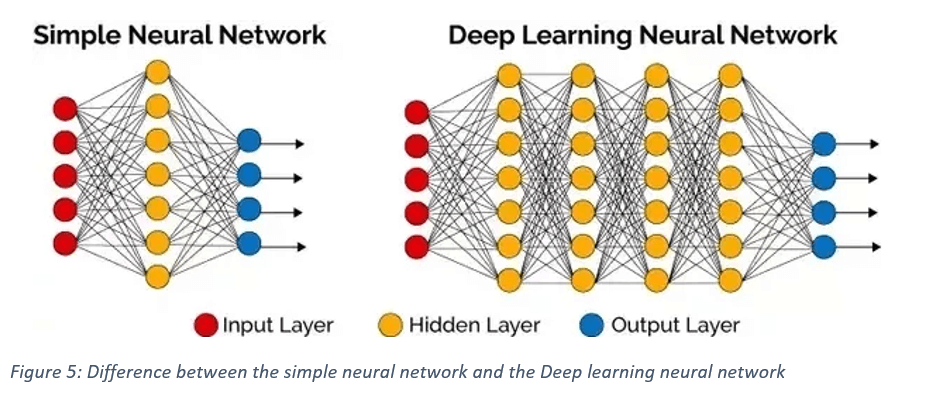

Neural networks, an approach that goes back to the early days of machine learning and has recently returned to the fore, are inspired by the biological structure of our brain and the interconnections between its neurons. However, unlike a biological brain, where any neuron can connect to any other neuron, these networks have discrete layers, connections, and directions of data propagation. For example, you can take an image, cut it into tiles, and feed them into the first layer of the neural network. The first layer passes its output to the second layer, which performs its own task, and so on, until the last layer, the output layer, produces the final result.
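The layer-by-layer flow described above can be sketched as a forward pass. This minimal example rests on assumptions of our own: each neuron computes a weighted sum of the previous layer's outputs plus a bias and applies a sigmoid activation, the four inputs stand for the brightness of four image tiles, and all weights are made up rather than trained.

```python
import math
from typing import List, Tuple

Neuron = Tuple[List[float], float]  # (one weight per input, bias)
Layer = List[Neuron]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def forward(inputs: List[float], layers: List[Layer]) -> List[float]:
    """Feed inputs through the layers in order; data only flows forward."""
    activations = inputs
    for layer in layers:
        # Each neuron: weighted sum of the previous layer's outputs, plus bias, then sigmoid
        activations = [
            sigmoid(sum(w * a for w, a in zip(weights, activations)) + bias)
            for weights, bias in layer
        ]
    return activations


tiles = [0.9, 0.1, 0.8, 0.2]  # hypothetical brightness of four image tiles
hidden: Layer = [([0.5, -0.4, 0.3, 0.1], 0.0), ([-0.2, 0.6, -0.1, 0.4], 0.1)]
output: Layer = [([1.2, -0.7], -0.3)]
print(forward(tiles, [hidden, output]))  # a one-element list with a value between 0 and 1
```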

Each neuron assigns a weight to its input, reflecting how correct or incorrect that input is relative to the task being performed, and the final output is determined by the total of those weightings. The traffic sign example is often used to explain the concept. The image of the sign is broken down into attributes, such as its shape and its letters, which the neurons examine to estimate, in terms of probabilities, whether it is a stop sign or something else.

In our example, the system was 86% confident that the image was a stop sign, 7% that it was a speed limit sign, and 5% that it was not a sign at all. The network architecture then tells the neural network whether its answer is right or wrong.
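One common way for a network to produce a probability vector like the one in this example is to apply the softmax function to the raw scores of its output layer. The sketch below assumes softmax and invents the scores; it gives a similar split to the example but is not how that particular system was necessarily built.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Turn raw output-layer scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


labels = ["stop sign", "speed limit sign", "not a sign"]
scores = [3.0, 0.5, 0.2]  # hypothetical scores from the network's last layer
probs = softmax(scores)
for label, p in zip(labels, probs):
    print(f"{label}: {p:.0%}")
```

The highest score always receives the largest probability, and during training the gap between this output and the correct answer is what drives the weight adjustments.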

It follows that the network is likely to give many wrong answers early in training. Training requires thousands of images, so that the weights on the neurons' inputs are tuned precisely enough to give the correct answer every time, whatever the conditions around the sign (fog, sun, rain, etc.). At that point, the neural network has taught itself what a stop sign looks like.
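The weight-tuning process can be illustrated with the smallest possible “network”: a single sigmoid neuron (logistic regression) trained by stochastic gradient descent. This is a deliberately simplified sketch: instead of raw pixels, each example is two hypothetical scores between 0 and 1 (how octagonal the shape looks and how clearly the letters read), and the tiny dataset, learning rate, and number of epochs are our own choices. A deep network learns such features itself, with far more weights.

```python
import math
from typing import List, Tuple

Example = Tuple[List[float], int]  # (features, label: 1 = stop sign, 0 = not)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train(data: List[Example], epochs: int = 1000, lr: float = 0.5) -> Tuple[List[float], float]:
    """Fit one sigmoid neuron by stochastic gradient descent on the log-loss."""
    weights, bias = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            error = p - y  # log-loss gradient with respect to the weighted sum
            # Move each weight against the gradient: confident wrong answers move it most
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias


def classify(weights: List[float], bias: float, x: List[float]) -> int:
    return int(sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) >= 0.5)


# Hypothetical training set: [shape score, letter score]
data: List[Example] = [
    ([1.0, 1.0], 1),  # clear view
    ([0.8, 0.6], 1),  # fog blurs the sign
    ([0.9, 0.5], 1),  # a branch hides some letters
    ([0.1, 0.0], 0),
    ([0.2, 0.1], 0),
    ([0.0, 0.2], 0),
]
weights, bias = train(data)
print([classify(weights, bias, x) for x, _ in data])
```

Before training, every example gets the same 50% answer; after enough passes over the data, the neuron should classify all six training examples correctly.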

Andrew Ng was one of the first to apply deep learning at Google, in a project that learned to pick out images of cats from YouTube videos. Ng's approach was to make the neural network much larger, increasing the number of layers and nodes, and then to run a huge amount of data through it to train it; in this case, the data were images extracted from about 10 million YouTube videos.
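Why does making a network larger demand so much more data and computation? A quick count shows how fast the number of adjustable weights grows. The sketch below assumes fully connected layers, where every neuron takes input from every neuron in the previous layer, and uses hypothetical layer sizes; it does not describe the architecture of Ng's network.

```python
from typing import List


def count_parameters(layer_sizes: List[int]) -> int:
    """Weights plus biases in a fully connected feedforward network.

    layer_sizes[0] is the number of inputs; each later entry is one layer's neuron count.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


print(count_parameters([100, 10, 1]))             # 1021
print(count_parameters([100, 100, 100, 100, 1]))  # 30401
```

In this toy case, adding a few wider layers multiplies the parameter count by about 30, and every one of those weights has to be tuned from data.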

Today, image recognition systems trained by deep learning can outperform humans at some tasks, from identifying cats to detecting markers of leukemia in blood and tumors in magnetic resonance imaging (MRI) scans. Google's AlphaGo combined all of these techniques to master the game of Go, training its neural network by playing against itself again and again.

Deep learning has made many machine learning ideas workable, extending the field of artificial intelligence to self-driving cars, better preventive health care, and many other services. Artificial intelligence is the present and the future. With it, it is possible to achieve things that we considered science fiction in the past.

Beyond artificial intelligence and machine learning: deep learning, neural networks, and cognitive computing

Of course, machine learning and artificial intelligence are not the only terms associated with this field of computer science. IBM often uses the term “cognitive computing”, which is more or less synonymous with artificial intelligence.

But some other terms have a distinct meaning. For example, artificial neural networks are systems designed to process information in ways similar to how biological brains work. Things can get confusing because neural networks tend to be particularly good at machine learning, so the two terms are sometimes conflated.

In addition, neural networks provide the foundation for deep learning, a kind of machine learning. Deep learning uses a set of machine learning algorithms that work in multiple layers. This has been made possible in part by systems that use graphics processors (GPUs) to process large amounts of data at the same time.

If you are confused by all these terms, you are not alone. Computer scientists continue to debate their precise definitions and probably will for some time to come. As companies keep pouring money into artificial intelligence and machine learning research, more terminology is likely to appear and add to the complexity.

IBM seeks to model artificial intelligence on the human brain

To keep pace with developments in artificial intelligence, companies and developers want to rethink its fundamentals by examining “human intelligence” and studying how machines and software can imitate it. IBM has embarked on the ambitious task of teaching artificial intelligence to act like the human brain.

Many existing machine learning systems have been built to extract information from datasets in order to solve specific problems, such as playing the Chinese game of Go or identifying skin cancer from images. But this capability is narrow and differs from how the human brain works.

Human beings learn gradually, from their daily lives. As they receive information throughout their lives, their minds adjust to accommodate it, which is not the case for most existing artificial systems. Human reasoning and problem-solving skills can also handle problems that artificial systems cannot deal with.

IBM is seeking to develop such human-like thinking systems, and so is a team of researchers at Google's DeepMind, which has created a “neural network” that uses “logical reasoning” to perform tasks.

The network passed the test with an accuracy of up to 96%, compared with the modest 42% to 77% achieved by traditional machine learning models. The network was also able to handle “language problems”, an area under active development, and the researchers are working on the network's ability to observe and retain memories, as well as its reasoning skills.

These strategies could broaden the future of artificial intelligence. “Training neural networks requires a lot of hard work to get the best design,” said Irina Erich, a member of IBM's research team, in an interview with Engadget. “And it would be great if those networks were able to build themselves.”

By Nada and Nadia Berchane, students in competitive intelligence at the University of Angers

Bibliography

[1] Calum McClelland, “The Difference Between Artificial Intelligence, Machine Learning and Deep Learning”, Medium, Dec 4, 2017, www.medium.com

[2] “Advanced Artificial Intelligence and Machine Learning”, FICO, www.fico.com

[3] Youssef Khashef, “What is the Difference between Neural Networks and Deep Learning?”, Quora, Dec 19, 2015, www.quora.com

[4] Dawn Parzych, “Artificial Intelligence vs. Machine Learning vs. Deep Learning”, DZone, Sep 22, 2017, www.dzone.com

[5] “Applying Artificial Intelligence and Machine Learning in Robotics”, Robotics Online (RIA), Jan 11, 2018, www.robotics.org

[6] Tristan Greene, “IBM and Unity are teaming up to bring Watson’s AI to VR and AR games”, TNW, www.thenextweb.com

[1] “Deep learning, moving beyond shallow machine learning since 2006”, www.deeplearning.net

[2] “What’s the difference between artificial intelligence, machine learning and deep learning?”, Nvidia, www.nvidia.com